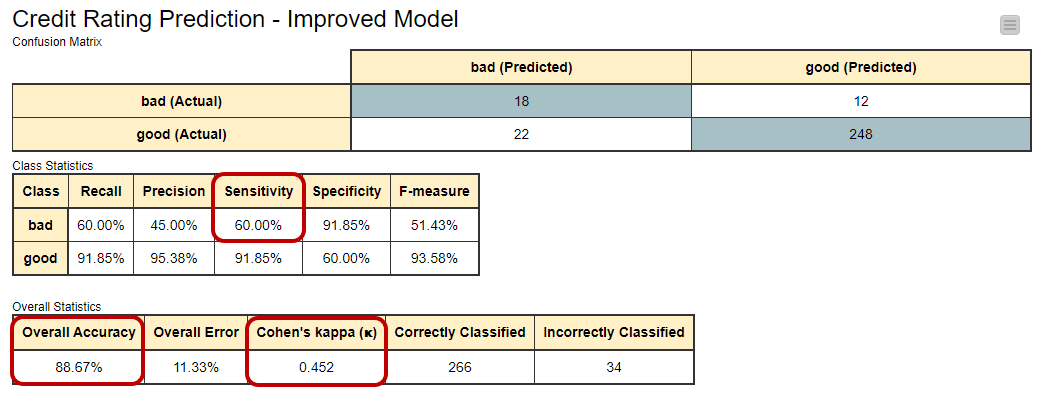

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics
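The Laerd guide covers computing Cohen's kappa in SPSS. Outside SPSS, the statistic can be sketched directly from its definition, κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed proportion of agreement and p_e is the agreement expected by chance from the two raters' marginal proportions. The Python below is a minimal illustration, not SPSS's implementation. The function name `cohens_kappa` and the counts are hypothetical.

```python
from typing import Sequence


# Hypothetical helper name; not an API from the linked sources.
def cohens_kappa(table: Sequence[Sequence[float]]) -> float:
    """Unweighted Cohen's kappa from a square k x k table of counts.

    table[i][j] is the number of items rater A placed in category i
    and rater B placed in category j.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: share of items on the main diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Marginal proportions for rater A (rows) and rater B (columns).
    row_p = [sum(table[i]) / n for i in range(k)]
    col_p = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    # Chance agreement if both raters chose independently with these marginals.
    p_e = sum(row_p[i] * col_p[i] for i in range(k))
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical ratings of 100 items into three ordered categories.
    counts = [
        [20, 5, 0],
        [3, 30, 4],
        [0, 6, 32],
    ]
    print(f"kappa = {cohens_kappa(counts):.3f}")  # kappa = 0.725
```

With these counts p_o = 0.82 and p_e = 0.346, so κ = 0.474 / 0.654 ≈ 0.725.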

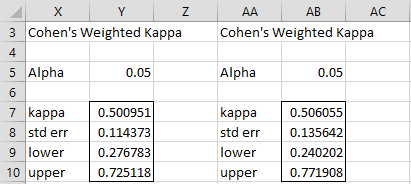

Candidates' inter-rater reliabilities: Cohen's weighted kappa (with... | Download Scientific Diagram

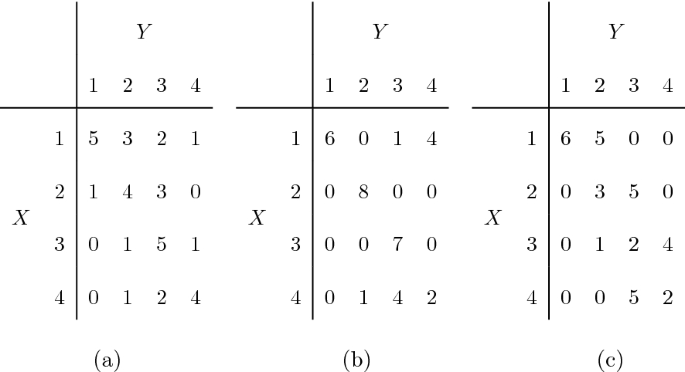

Pairwise classifications of two observers who rated teacher 7 on 35... | Download Scientific Diagram

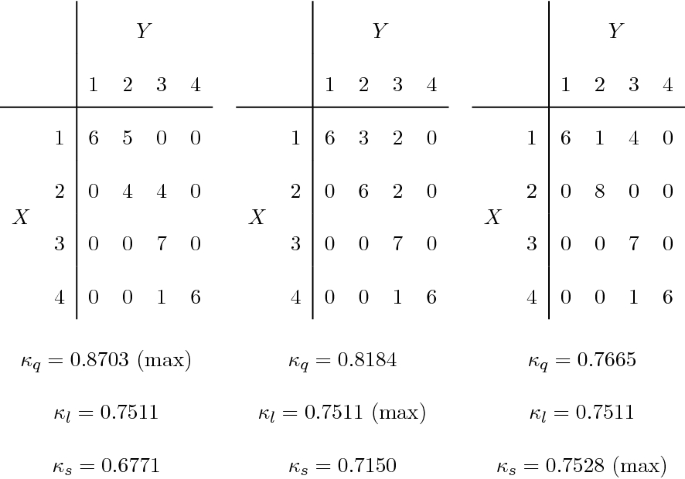

![Cohen's quadratically weighted kappa is higher than linearly weighted kappa for tridiagonal agreement tables | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/5df092de279231383db41edabbc6e93624b302b6/2-Table1-1.png)

Cohen's quadratically weighted kappa is higher than linearly weighted kappa for tridiagonal agreement tables | Semantic Scholar
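A short calculation makes the title's claim plausible. Weighted kappa can be written as κ_w = 1 - Σ d_ij p_ij / Σ d_ij p_i. p_.j, with disagreement weights d_ij = |i - j| (linear) or (i - j)² (quadratic). In a tridiagonal table every disagreement lies between adjacent categories, where |i - j| = 1. The observed term is therefore identical under both schemes, while the chance-expected term can only grow when distances are squared. The sketch below compares both schemes on one such table. The function name `weighted_kappa`, its `power` parameter and the counts are hypothetical. This is not code from the paper.

```python
from typing import Sequence


# Hypothetical helper; power=1 gives linear weights, power=2 quadratic weights.
def weighted_kappa(table: Sequence[Sequence[float]], power: int) -> float:
    """Cohen's weighted kappa using disagreement weights |i - j| ** power."""
    k = len(table)
    n = sum(sum(row) for row in table)
    row_p = [sum(table[i]) / n for i in range(k)]
    col_p = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    observed = 0.0  # weighted disagreement actually seen
    expected = 0.0  # weighted disagreement expected by chance
    for i in range(k):
        for j in range(k):
            d = abs(i - j) ** power
            observed += d * table[i][j] / n
            expected += d * row_p[i] * col_p[j]
    return 1 - observed / expected


if __name__ == "__main__":
    # Hypothetical tridiagonal table: nonzero counts only on and next to the diagonal.
    tri = [
        [20, 5, 0],
        [3, 30, 4],
        [0, 6, 32],
    ]
    linear = weighted_kappa(tri, power=1)
    quadratic = weighted_kappa(tri, power=2)
    print(f"linear = {linear:.3f}, quadratic = {quadratic:.3f}")
    # linear = 0.783, quadratic = 0.848
```

Both versions share the same observed disagreement (0.18) here. The expected disagreement rises from 0.8314 (linear) to 1.1862 (quadratic), which is why the quadratic value is higher.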

Summary measures of agreement and association between many raters' ordinal classifications - ScienceDirect

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science
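Fleiss' kappa handles agreement among a group of raters, where pairwise Cohen's kappa covers only two. Strictly, it generalizes Scott's pi rather than Cohen's kappa. Each subject's agreement is the fraction of rater pairs that chose the same category, and these values are averaged over subjects. Chance agreement is the sum of squared pooled category proportions. The code is a minimal Python sketch, assuming every subject is rated by the same number of raters. The function name `fleiss_kappa` and the counts are illustrative.

```python
from typing import Sequence


# Hypothetical helper name; assumes a fixed number of raters per subject.
def fleiss_kappa(ratings: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa; ratings[i][j] = raters who put subject i in category j."""
    n_raters = sum(ratings[0])
    if any(sum(row) != n_raters for row in ratings):
        raise ValueError("every subject must be rated by the same number of raters")
    n_subjects = len(ratings)
    n_categories = len(ratings[0])
    # Per-subject agreement: fraction of rater pairs that chose the same category.
    per_subject = [
        (sum(x * x for x in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in ratings
    ]
    p_bar = sum(per_subject) / n_subjects
    # Chance agreement from the pooled category proportions.
    total = n_subjects * n_raters
    p_j = [sum(row[j] for row in ratings) / total for j in range(n_categories)]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Illustrative counts: 10 subjects, 14 raters each, 5 categories.
    table = [
        [0, 0, 0, 0, 14],
        [0, 2, 6, 4, 2],
        [0, 0, 3, 5, 6],
        [0, 3, 9, 2, 0],
        [2, 2, 8, 1, 1],
        [7, 7, 0, 0, 0],
        [3, 2, 6, 3, 0],
        [2, 5, 3, 2, 2],
        [6, 5, 2, 1, 0],
        [0, 2, 2, 3, 7],
    ]
    print(f"Fleiss' kappa = {fleiss_kappa(table):.3f}")  # Fleiss' kappa = 0.210
```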

Cohen's linearly weighted kappa is a weighted average of 2 × 2 kappas | 1. Introduction: The kappa coefficient (Cohen, 1960; Bre…
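The title's result can be checked numerically. Collapsing a k-category table at each of its k - 1 cut points gives k - 1 two-by-two tables, each comparing categories below the cut with those at or above it. In the formulation sketched here, the weight on each collapsed kappa is that table's chance-expected disagreement, 1 - p_e. The identity holds because |i - j| counts how many cut points separate categories i and j. The check uses hypothetical tables and helper names and does not reproduce the paper's own derivation or notation.

```python
from typing import List, Sequence, Tuple

Table = Sequence[Sequence[float]]


def linear_weighted_kappa(table: Table) -> float:
    """Weighted kappa with linear disagreement weights |i - j| (hypothetical helper)."""
    k = len(table)
    n = sum(sum(row) for row in table)
    row_p = [sum(table[i]) / n for i in range(k)]
    col_p = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    observed = sum(abs(i - j) * table[i][j] / n for i in range(k) for j in range(k))
    expected = sum(abs(i - j) * row_p[i] * col_p[j] for i in range(k) for j in range(k))
    return 1 - observed / expected


def collapse(table: Table, cut: int) -> List[List[float]]:
    """2 x 2 table: categories [0, cut) versus [cut, k) for both raters."""
    out = [[0.0, 0.0], [0.0, 0.0]]
    for i, row in enumerate(table):
        for j, count in enumerate(row):
            out[int(i >= cut)][int(j >= cut)] += count
    return out


def kappa_2x2(t: Table) -> Tuple[float, float]:
    """Return (kappa, chance-expected disagreement 1 - p_e) for a 2 x 2 table."""
    n = t[0][0] + t[0][1] + t[1][0] + t[1][1]
    p_o = (t[0][0] + t[1][1]) / n
    r0 = (t[0][0] + t[0][1]) / n  # rater A's share below the cut
    c0 = (t[0][0] + t[1][0]) / n  # rater B's share below the cut
    p_e = r0 * c0 + (1 - r0) * (1 - c0)
    # Undefined (division by zero) if a rater puts every item on one side of the cut.
    return (p_o - p_e) / (1 - p_e), 1 - p_e


def kappa_from_cuts(table: Table) -> float:
    """Average of the k - 1 collapsed kappas, weighted by 1 - p_e of each."""
    weighted_sum = 0.0
    total_weight = 0.0
    for cut in range(1, len(table)):
        kappa_c, weight = kappa_2x2(collapse(table, cut))
        weighted_sum += weight * kappa_c
        total_weight += weight
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Hypothetical 4-category table (not tridiagonal, so the check is not a special case).
    counts = [
        [12, 3, 1, 0],
        [2, 15, 4, 1],
        [1, 3, 18, 2],
        [0, 1, 2, 9],
    ]
    print(f"{linear_weighted_kappa(counts):.6f}")
    print(f"{kappa_from_cuts(counts):.6f}")  # same value as the line above
```

Expressing the weights as expected disagreements makes the identity exact. Each weighted term reduces to (expected - observed) disagreement for its cut, and summing over cuts recovers the linear-weight numerator and denominator.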

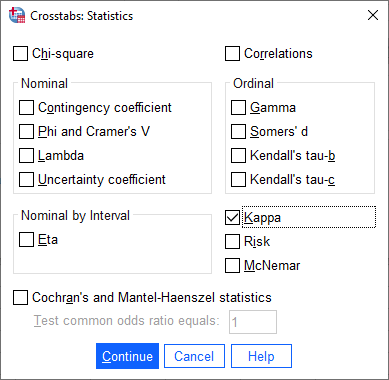

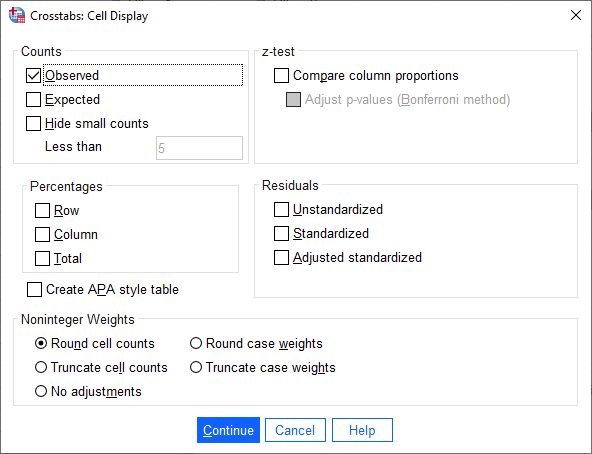

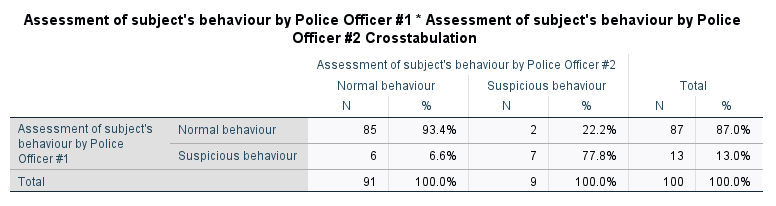

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics

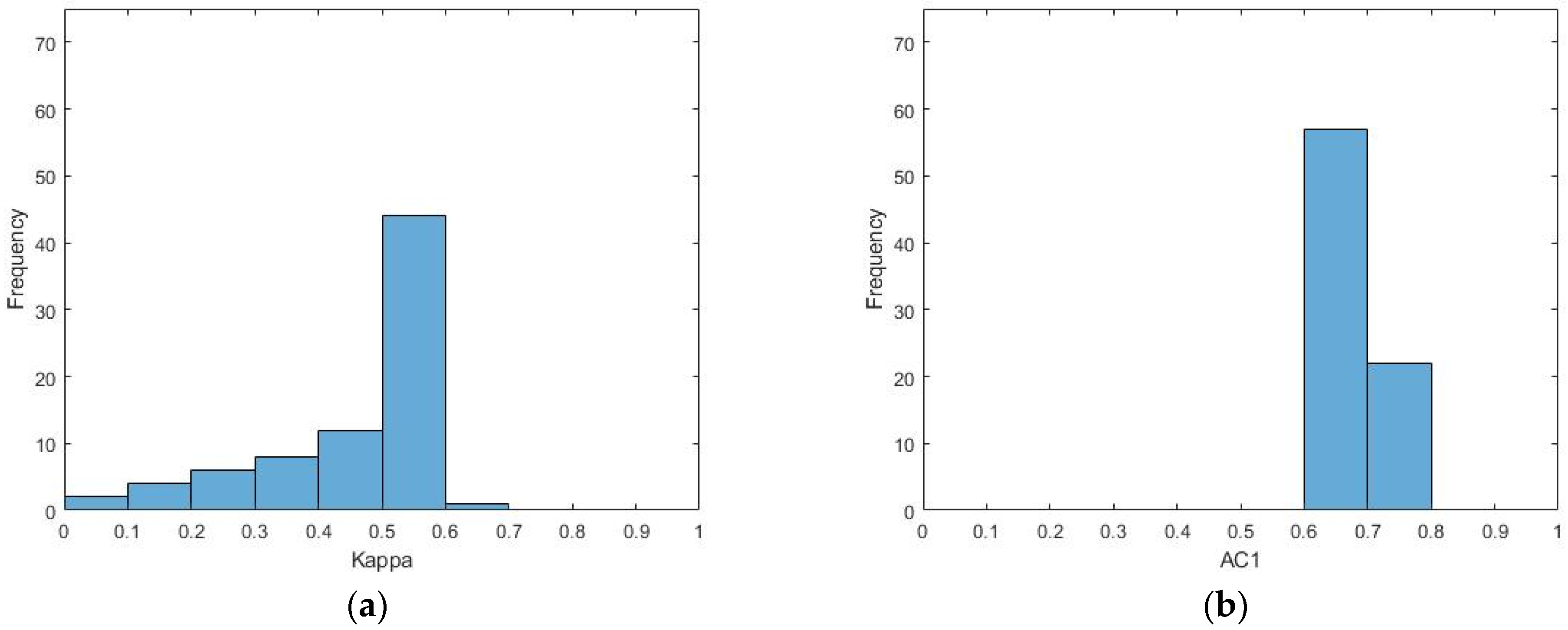

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Interrater agreement of two adverse drug reaction causality assessment methods: A randomised comparison of the Liverpool Adverse Drug Reaction Causality Assessment Tool and the World Health Organization-Uppsala Monitoring Centre system | PLOS

![Five ways to look at Cohen's kappa | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/037fd6c1fe0c2624f2d0287c3d527f0fd4dac953/1-Table1-1.png)